The photometry calibration implemented by Munipack is based on the relation between detected counts and expected photons, a natural consequence of using photon-counting detectors.

The traditional way of calibrating optical observations is to derive so-called instrumental magnitudes from some observed quantity. The offset between the instrumental and catalogue magnitudes then represents the calibration.

Munipack offers an alternative approach. Magnitudes of calibration stars are converted to photons, and the calibration establishes a relation between the expected number of photons and the observed counts.

The photon approach has been chosen for two reasons. The principal reason is that photons are the physical quantity actually detected by modern detectors. The second reason is that the statistical properties of photon counts are much better suited to robust statistics.

Why photons? Common modern devices detect photons, and their energy and wavelength do not matter (at least for an ideal detector). The number of detected photons is measured as the count of events registered in the detector. For an ideal detector, the number of counts equals the number of detected photons.

Light is composed of electromagnetic waves which carry the energy emitted by sources. The energy E of n photons of a monochromatic wave with frequency ν is given by Planck's relation:

$$E = n\,h\nu$$

The energy E can be measured by a calorimeter (bolometer), while the number of photons n is counted by digital cameras or photomultipliers.

In astronomical photometry, we collect the energy or photons falling on an area A during a time interval T. To obtain values independent of these factors, we normalise the quantities per unit time and area. The energy per unit time and area is replaced by the energy flux

$$\frac{E}{T A} \to F$$

and photons by photon flux

$$\frac{n}{T A} \to \Phi.$$

With these substitutions, Planck's relation takes the form

$$F = \Phi\,h\nu$$
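As a quick numerical check of F = Φ hν, the sketch below converts an energy flux into a photon flux for monochromatic light, Φ = F / (hν) with ν = c / λ. The function name `photon_flux` is hypothetical (not part of Munipack), and the 550 nm wavelength in the usage line is only an illustrative choice.

```python
# Exact SI values of the Planck constant and the speed of light.
H = 6.62607015e-34   # J s
C = 2.99792458e8     # m / s


def photon_flux(energy_flux, wavelength):
    """Return the photon flux Phi = F / (h nu) in photons / s / m^2.

    energy_flux -- energy flux F in W / m^2
    wavelength  -- wavelength in m, converted to frequency by nu = c / lambda
    """
    nu = C / wavelength
    return energy_flux / (H * nu)


if __name__ == "__main__":
    # 1e-9 W/m^2 of monochromatic 550 nm light
    print(f"{photon_flux(1e-9, 550e-9):.3e} photons / s / m^2")  # prints 2.769e+09 photons / s / m^2
```

Doubling the energy flux doubles the photon flux; only the photon energy hν links the two quantities.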

The relation between the energy flux F and the apparent magnitude m in a filter is given by the inverse of Pogson's equation:

$$F = f_{\nu 0}\,\Delta\nu \cdot 10^{-m/2.5}$$

where $f_{\nu 0}$ is the reference flux density (per unit frequency) and Δν is the frequency width of the filter (the filter is modelled as a rectangle). The product $f_{\nu 0}\,\Delta\nu$ is the flux through the given filter, and hν is the energy of a single photon. Since the mean photon flux is the energy flux divided by the photon energy, we have

$$\Phi = \frac{f_{\nu 0}\,\Delta\nu}{h\nu} \cdot 10^{-m/2.5}$$

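Of course, the photon flux can also be expressed in terms of wavelength. Using the standard relation between frequency and wavelength (here c denotes the speed of light)

$$\nu = c / \lambda,$$

we can write the photon flux as

$$\Phi = \frac{f_{\lambda 0}\,\Delta\lambda}{h c / \lambda} \cdot 10^{-m/2.5},$$

where $f_{\lambda 0}$ is the reference flux density per unit wavelength and Δλ is the wavelength width of the filter; the in-band flux $f_{\lambda 0}\,\Delta\lambda$ equals $f_{\nu 0}\,\Delta\nu$.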
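The frequency and wavelength forms must give the same photon flux, because the in-band flux is the same in both. The sketch below checks this numerically for a narrow rectangular filter, converting with $f_\nu = f_\lambda \lambda^2 / c$ and $\Delta\nu \approx c\,\Delta\lambda / \lambda^2$ (an approximation valid for narrow filters). The constants are the V-band values used for the illustration table in this section (4 · 10⁻¹¹ W m⁻² nm⁻¹, 70 nm, 550 nm); the function names are hypothetical.

```python
H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m / s


def photon_flux_lambda(mag, f_lambda0, dlambda, wavelength):
    """Phi = f_lambda0 * dlambda / (h c / lambda) * 10**(-m/2.5), all SI units."""
    return f_lambda0 * dlambda / (H * C / wavelength) * 10 ** (-mag / 2.5)


def photon_flux_nu(mag, f_nu0, dnu, nu):
    """Phi = f_nu0 * dnu / (h nu) * 10**(-m/2.5), all SI units."""
    return f_nu0 * dnu / (H * nu) * 10 ** (-mag / 2.5)


if __name__ == "__main__":
    f_lambda0 = 4e-11 * 1e9   # 4e-11 W/m^2/nm expressed in W/m^2/m
    dlambda = 70e-9           # filter width, m
    wavelength = 550e-9       # effective wavelength, m

    # Same reference flux and filter width in frequency units
    # (narrow rectangular filter approximation).
    nu = C / wavelength
    f_nu0 = f_lambda0 * wavelength ** 2 / C   # W / m^2 / Hz
    dnu = C * dlambda / wavelength ** 2       # Hz

    for mag in (0.0, 10.0):
        print(mag,
              photon_flux_lambda(mag, f_lambda0, dlambda, wavelength),
              photon_flux_nu(mag, f_nu0, dnu, nu))
```

Both columns agree to rounding error, since $f_{\nu 0}\,\Delta\nu = f_{\lambda 0}\,\Delta\lambda$ and hν = hc/λ.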

Just for illustration, the number of photons falling on one square metre per second in the Johnson V filter (close to the sensitivity of the human eye) is summarised in the following table, using the adopted constants $f_{\lambda 0} = 4 \cdot 10^{-11}$ W m⁻² nm⁻¹, Δλ = 70 nm and λ = 550 nm. The values are given as orders of magnitude.

| magnitude | energy flux [W m⁻²] | photon flux [ph s⁻¹ m⁻²] | example |
|---|---|---|---|
| 0 | 10⁻⁹ | 10¹⁰ | Vega |
| 5 | 10⁻¹¹ | 10⁸ | naked-eye limit |
| 10 | 10⁻¹³ | 10⁶ | bright quasars |
| 15 | 10⁻¹⁵ | 10⁴ | Kuiper belt objects |
| 20 | 10⁻¹⁷ | 10² | optical afterglows |
| 25 | 10⁻¹⁹ | 1 | ground-based telescope limit |
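The table can be recomputed from the adopted constants with a few lines; a minimal sketch, assuming the rectangular filter model and the wavelength form of the photon flux. For m = 0 it gives roughly 2.8 · 10⁻⁹ W m⁻² and 7.8 · 10⁹ photons s⁻¹ m⁻², which round to the orders of magnitude listed above. The name `v_band_fluxes` is hypothetical.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m / s

# V-band constants used for the table (rectangular filter model), SI units.
F_LAMBDA0 = 4e-11 * 1e9   # 4e-11 W/m^2/nm expressed in W/m^2/m
DLAMBDA = 70e-9           # filter width, m
WAVELENGTH = 550e-9       # effective wavelength, m


def v_band_fluxes(mag):
    """Return (energy flux [W/m^2], photon flux [photons/s/m^2]) for magnitude mag."""
    energy = F_LAMBDA0 * DLAMBDA * 10 ** (-mag / 2.5)   # F = f0 * dlambda * 10^(-m/2.5)
    photons = energy / (H * C / WAVELENGTH)             # Phi = F / (h c / lambda)
    return energy, photons


if __name__ == "__main__":
    for mag in range(0, 30, 5):
        energy, photons = v_band_fluxes(mag)
        # Exact values followed by the nearest power of ten, as used in the table.
        print(f"{mag:2d}  {energy:9.2e} (1e{round(math.log10(energy))})"
              f"  {photons:9.2e} (1e{round(math.log10(photons))})")
```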

Knowing the magnitudes of standard stars, we can compute the expected photon flux and hence the expected number of photons for our observation:

$$n = A\,T\,\Phi$$

and compare it with the actually observed photons c = g d, where g is the gain and d is the number of events recorded by our instrument:

$$\eta = c / n$$

which gives the sensitivity of our instrument relative to the standard one. The ratio has the meaning of the light efficiency of the whole apparatus (detector, optics and atmosphere together).
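The two steps above, expected photons n = A T Φ and efficiency η = c / n with c = g d, can be sketched as follows. This is only an illustration under stated assumptions: the V-band constants of the table are used as defaults, and the aperture, exposure time, gain and event count in the usage are hypothetical numbers, not values from Munipack.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m / s


def expected_photons(mag, area, exptime,
                     f_lambda0=4e-11 * 1e9, dlambda=70e-9, wavelength=550e-9):
    """Expected photons n = A T Phi for a star of catalogue magnitude mag.

    area in m^2, exptime in s; defaults are the V-band constants
    (f_lambda0 in W/m^2/m, dlambda and wavelength in m).
    """
    phi = f_lambda0 * dlambda / (H * C / wavelength) * 10 ** (-mag / 2.5)
    return area * exptime * phi


def efficiency(gain, events, n_expected):
    """eta = c / n, where the observed photons are c = g d."""
    observed = gain * events
    return observed / n_expected


if __name__ == "__main__":
    area = math.pi * 0.1 ** 2   # hypothetical 20 cm aperture, m^2
    exptime = 60.0              # hypothetical exposure, s
    n = expected_photons(10.0, area, exptime)
    gain, events = 2.0, 2.0e5   # hypothetical gain and measured events
    print(f"n = {n:.3e} photons, eta = {efficiency(gain, events, n):.3f}")
```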

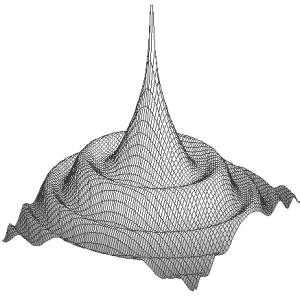

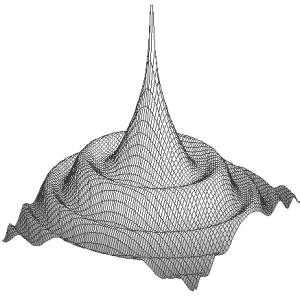

Both the catalogue quantities n and the measured quantities c are determined with a certain uncertainty. The main source of uncertainty is the detection mechanism of photons itself, which follows the Poisson distribution. The statistical error is related to the number of detected photons as σ² = c, as can be seen in a simple numerical experiment. Consequently, the relative uncertainty σ/c = 1/√c depends on the brightness of the calibration star, which is quite unlike common experience with ordinary measurements (of time or length).
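The simple numerical experiment mentioned above can be reproduced with a sketch like the following. It draws Poisson-distributed counts for a few mean values (Knuth's multiplication method from the standard library only, adequate for moderate means) and shows that the variance is close to the mean, so the relative error falls roughly as 1/√c. The chosen means, sample size and seed are arbitrary illustrative assumptions.

```python
import math
import random
import statistics


def poisson(lam, rng):
    """Draw one Poisson(lam) variate with Knuth's multiplication method.

    Adequate for moderate lam (exp(-lam) must not underflow, lam < ~700).
    """
    limit = math.exp(-lam)
    k = 0
    p = rng.random()
    while p > limit:
        k += 1
        p *= rng.random()
    return k


def experiment(lams=(10, 100, 400), n_samples=2000, seed=1):
    """Return (lam, mean, variance, relative error) for simulated photon counts."""
    rng = random.Random(seed)
    rows = []
    for lam in lams:
        counts = [poisson(lam, rng) for _ in range(n_samples)]
        mean = statistics.fmean(counts)
        var = statistics.variance(counts)
        rows.append((lam, mean, var, math.sqrt(var) / mean))
    return rows


if __name__ == "__main__":
    for lam, mean, var, rel in experiment():
        print(f"lam={lam:4d}  mean={mean:8.2f}  variance={var:8.2f}  sigma/c={rel:.3f}")
```

The variance column tracks the mean, while sigma/c shrinks from about 0.3 to about 0.05 as the mean grows from 10 to 400.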

Computing the mean of the ratio c/n directly is not quite correct, because the individual measurements inherently differ greatly in precision. Therefore, we transform to a new variable

$$\frac{n - c/\eta}{\sigma}$$

which has mean value 0 and dispersion 1. This approach is mathematically somewhat more complicated, because σ itself depends on η, so determining η requires the solution of an implicit non-linear equation.

The very heart of the calibration is the determination of the ratio η from a set of stars. These prerequisites lead to the minimisation of the function

$$\sum_i \rho\!\left(\frac{n_i - r\,c_i}{\sigma_i}\right)$$

where $\sigma_i^2 = r\,c_i + \sigma_x^2 + \dots$ (Poisson and other sources of noise), r = 1 / η is the unknown parameter, and ρ is a robust function (the classical χ² or least-squares method corresponds to the non-robust choice $\rho(x) = x^2$).
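The minimisation can be illustrated with a small sketch. The text does not say which robust ρ Munipack uses, so this example assumes Huber's function with the conventional threshold k = 1.345, and because r is a single parameter it simply minimises the sum by golden-section search rather than solving the implicit equation. The variance model follows $\sigma_i^2 = r\,c_i + \sigma_x^2$ as written above (further noise terms omitted). The data are synthetic, and all function names are hypothetical; this is not Munipack's implementation.

```python
import math


def huber(x, k=1.345):
    """Huber's robust rho: quadratic for |x| <= k, linear beyond (assumed choice)."""
    ax = abs(x)
    return 0.5 * x * x if ax <= k else k * ax - 0.5 * k * k


def least_squares(x):
    """Classical non-robust choice rho(x) = x^2."""
    return x * x


def objective(r, n, c, rho, sigma_x=0.0):
    """Sum_i rho((n_i - r c_i) / sigma_i) with sigma_i^2 = r c_i + sigma_x^2."""
    total = 0.0
    for ni, ci in zip(n, c):
        sigma = math.sqrt(r * ci + sigma_x ** 2)
        total += rho((ni - r * ci) / sigma)
    return total


def golden_min(f, lo, hi, tol=1e-10):
    """Minimise a unimodal function f on [lo, hi] by golden-section search."""
    invphi = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    x1, x2 = b - invphi * (b - a), a + invphi * (b - a)
    f1, f2 = f(x1), f(x2)
    while b - a > tol:
        if f1 < f2:                      # minimum lies in [a, x2]
            b, x2, f2 = x2, x1, f1
            x1 = b - invphi * (b - a)
            f1 = f(x1)
        else:                            # minimum lies in [x1, b]
            a, x1, f1 = x1, x2, f2
            x2 = a + invphi * (b - a)
            f2 = f(x2)
    return 0.5 * (a + b)


def fit_ratio(n, c, rho, sigma_x=0.0, lo=1e-3, hi=1e3):
    """Estimate r = 1/eta from expected photons n and observed counts c."""
    return golden_min(lambda r: objective(r, n, c, rho, sigma_x), lo, hi)


if __name__ == "__main__":
    counts = [1000, 2000, 5000, 10000, 20000, 50000]
    photons = [2.0 * ci for ci in counts]   # synthetic stars with true r = 2 (eta = 0.5)
    photons[2] = 4.0 * counts[2]            # one deviant star, e.g. a bad catalogue magnitude
    r_ls = fit_ratio(photons, counts, least_squares)
    r_rob = fit_ratio(photons, counts, huber)
    print(f"least squares: r = {r_ls:.4f}   Huber: r = {r_rob:.4f}   eta = {1 / r_rob:.4f}")
```

With a single deviant star, the least-squares estimate is pulled to about r ≈ 2.16, whereas the Huber estimate stays within 0.01 of the true r = 2, which is the reason for preferring a robust ρ.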

Once the parameter r = 1 / η is known, all objects can be transformed to standard photon counts:

$$n_i = r\,c_i$$

and also to fluxes or magnitudes.
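The chain from calibrated counts to photons, photon flux, energy flux and magnitude simply inverts the relations above. A minimal sketch, assuming the V-band constants of the table and a rectangular filter; the function name `calibrate` and the numbers in the check are hypothetical illustrations, not part of Munipack.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m / s

# V-band constants from the table (rectangular filter model), SI units.
F_LAMBDA0 = 4e-11 * 1e9   # W / m^2 / m
DLAMBDA = 70e-9           # m
WAVELENGTH = 550e-9       # m


def calibrate(counts, r, area, exptime):
    """Convert counts to (photons, photon flux, energy flux, magnitude)."""
    photons = r * counts                                          # n = r c
    phi = photons / (area * exptime)                              # Phi = n / (A T)
    energy_flux = phi * H * C / WAVELENGTH                        # F = Phi h nu
    mag = -2.5 * math.log10(energy_flux / (F_LAMBDA0 * DLAMBDA))  # Pogson's equation
    return photons, phi, energy_flux, mag


if __name__ == "__main__":
    # Hypothetical frame: 20 cm aperture, 60 s exposure, calibration ratio r = 3.5.
    photons, phi, energy_flux, mag = calibrate(5.0e4, 3.5, math.pi * 0.1 ** 2, 60.0)
    print(f"n = {photons:.3e}, Phi = {phi:.3e}, F = {energy_flux:.3e} W/m^2, m = {mag:.2f}")
```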

The photon calibration approach is common in high-energy astrophysics, the flux-based approach in radio astronomy, and the magnitude-based approach in (near-)optical astronomy. Important advantages are:

Why are magnitudes confusing? The brightest objects have negative magnitudes. Sums of magnitudes correspond to products of fluxes. Magnitude increases with distance. Magnitudes are used both as relative and as absolute quantities. Magnitudes have no units. There are no magnitude detectors.

The basic photometry tool is phcal, which computes the calibration ratio r = 1/η, records it in the CTPH keyword, and creates a new frame with values in photons (not counts). In the new frame, both the photometry table and the image values are calibrated in photons.

Manuals: Aperture Photometry, Photometry Calibration, Photometric corrections. Data Formats: Time Series Tables.